There’s been a lot of buzz around llms.txt.

But no major AI platform has confirmed that they use it.

Not yet, anyway.

And there’s no evidence that any major large language model (LLM) actually uses it when crawling.

So, why are some SEOs and site owners already adding it to their sites?

Because LLM traffic is projected to explode over the next few years.

Which means AI models could soon become your biggest traffic source.

Remember: robots.txt was once optional, too.

Today, it’s essential for managing search crawlers.

LLMs.txt could follow a similar path — becoming the standard way to guide AI to your most important content.

In this guide, you’ll learn how llms.txt files work, the key pros and cons, and the exact steps to create one for your site.

You’ll also see different llms.txt examples from real sites.

First up: a quick explainer.

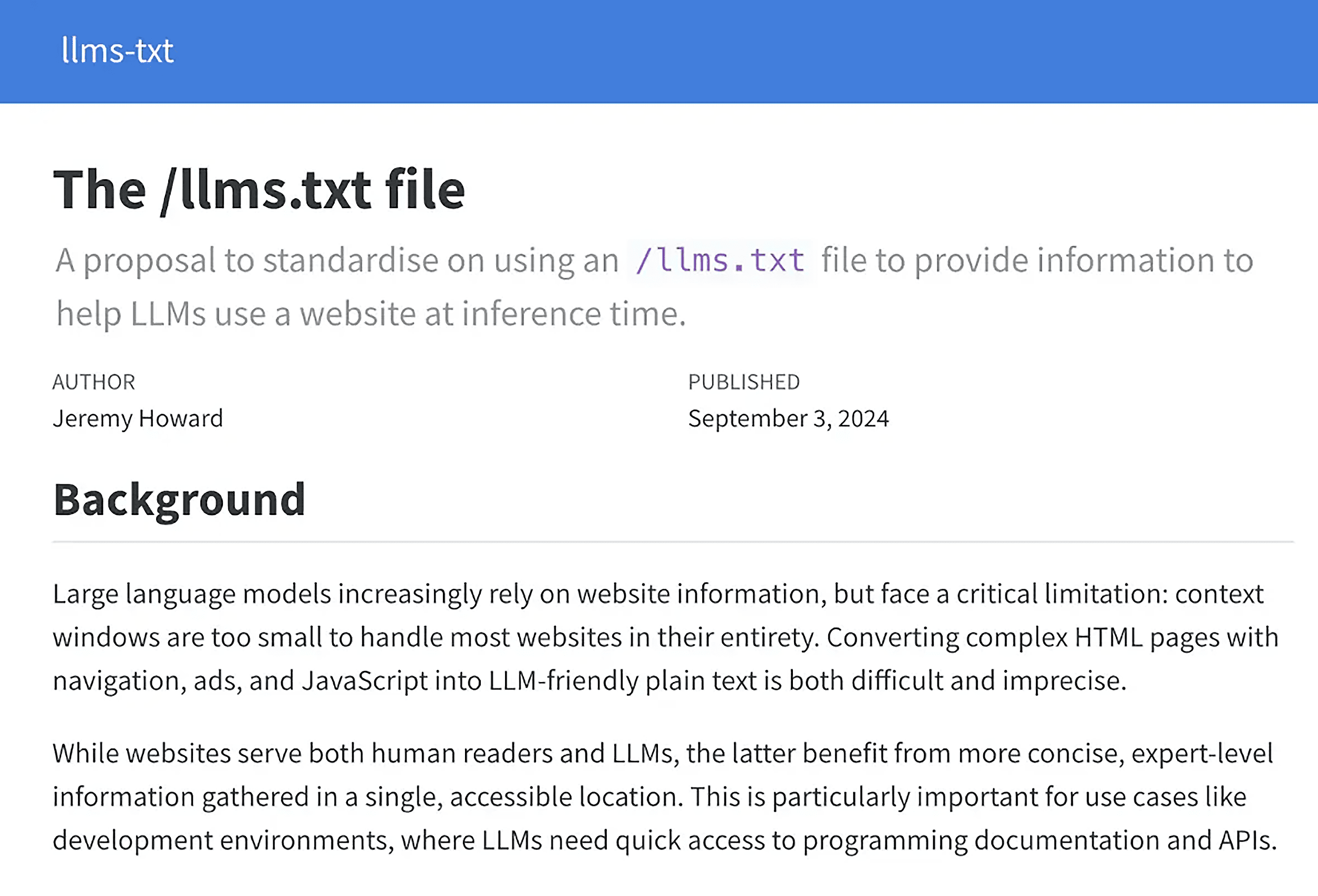

What Is LLMs.txt?

LLMs.txt is a plain-text file that tells AI models which pages to prioritize when crawling your site.

This proposed standard could make your content easier for AI systems to find, process, and cite.

Here’s how it works:

- You create a text file called llms.txt

- List your most important pages with brief descriptions of what each covers

- Place it at your site’s root directory

- In theory, LLM crawlers would then use the file to discover, prioritize, and better understand your key pages

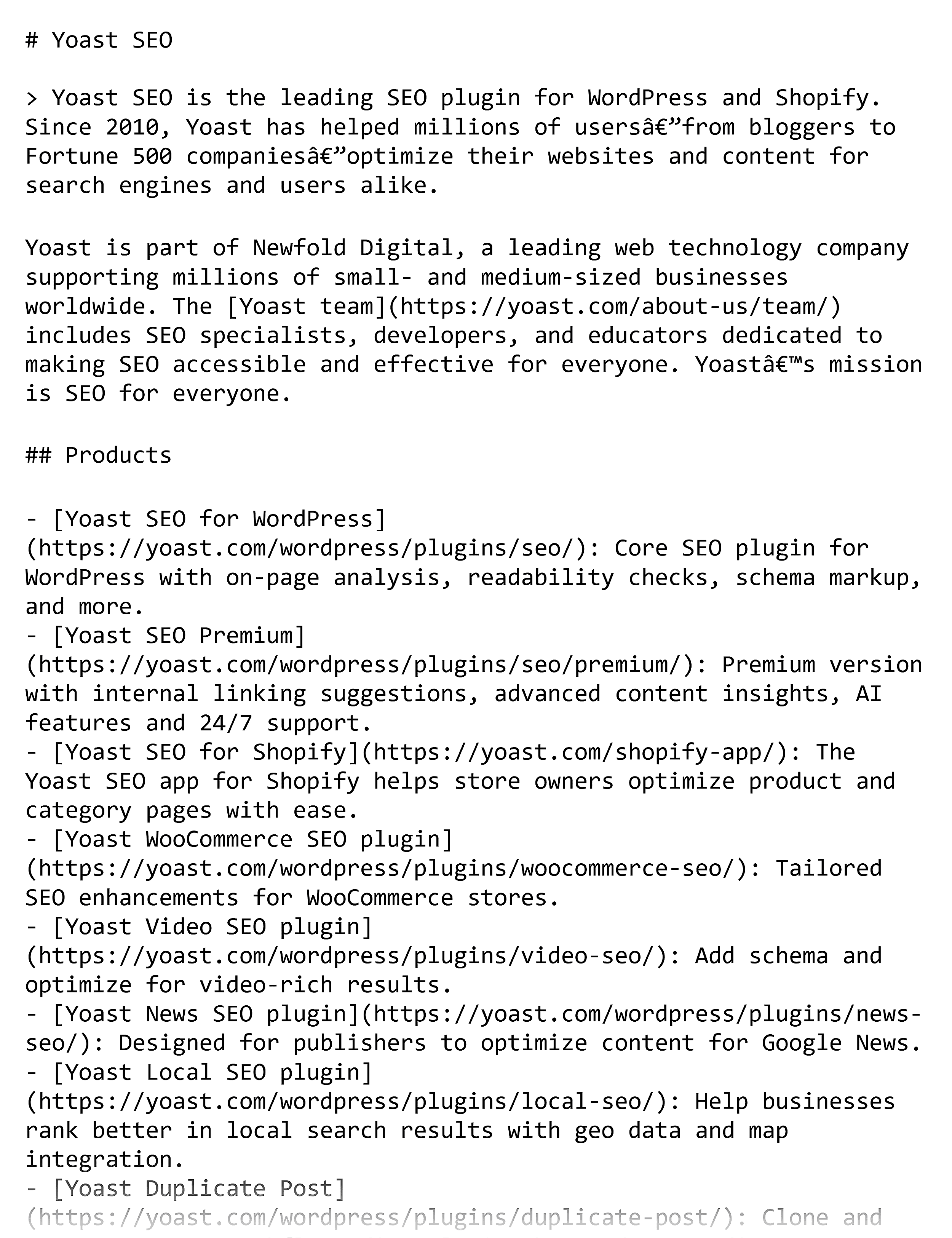

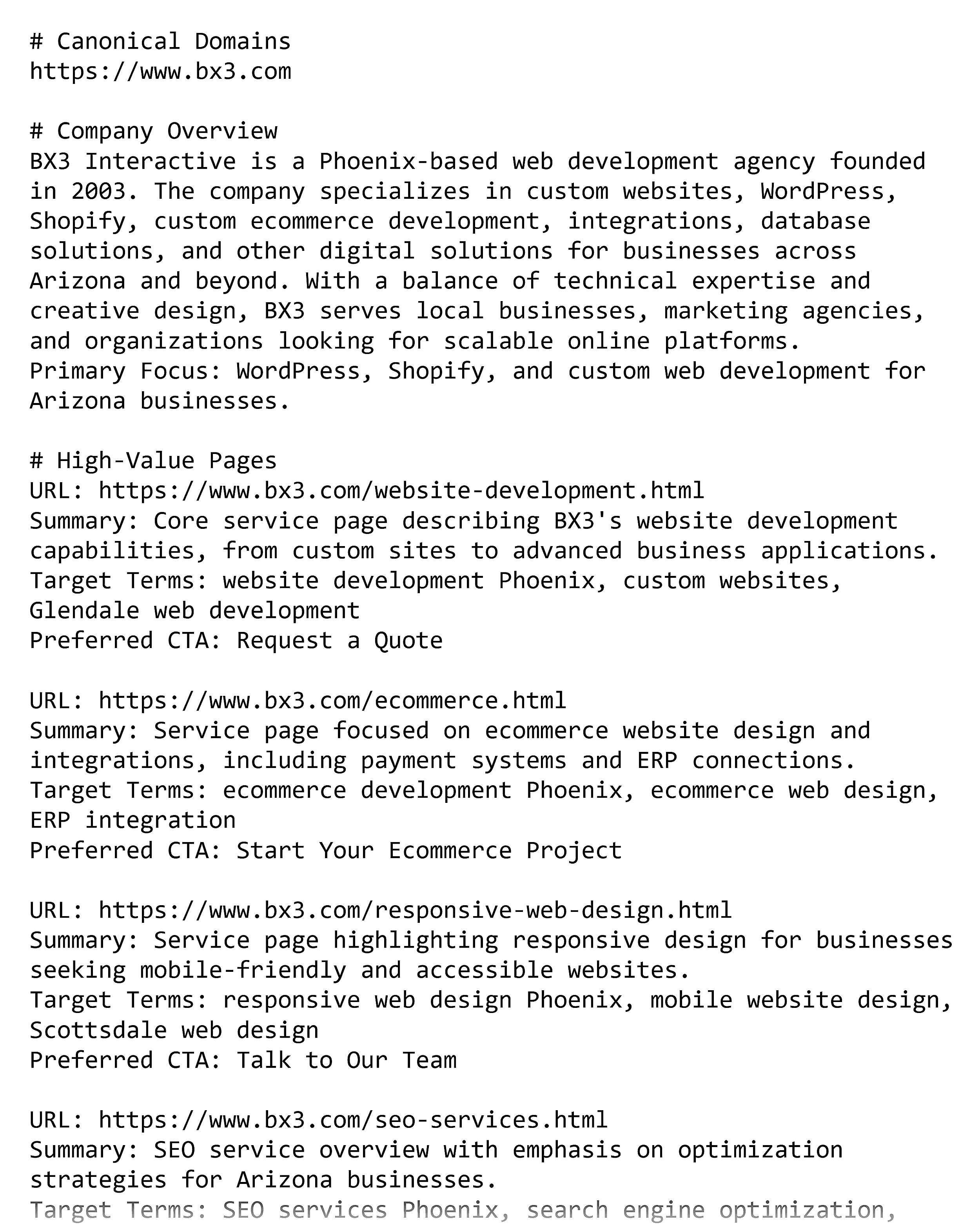

For example, here’s what Yoast SEO’s llms.txt file looks like:

Does LLMs.txt Replace Robots.txt?

Short answer: No.

They serve different purposes.

Robots.txt tells crawlers what they’re allowed to access on a site.

It uses directives like “Allow” and “Disallow” to control crawling behavior.

LLMs.txt suggests which pages AI models should prioritize.

It doesn’t control access. It just provides a curated list and makes it easier for crawlers to understand your content.

For example, you might use robots.txt to block crawlers from your admin dashboard and checkout pages.

Then, use llms.txt to point AI systems toward your help docs, product pages, and pricing guide.
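To see the access-control half of that split in action, here’s a minimal sketch using Python’s built-in urllib.robotparser module. The robots.txt rules, the example.com URLs, and the "ExampleBot" user agent are all hypothetical placeholders, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules; the paths are placeholders for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /checkout/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "ExampleBot") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/admin/settings", "/checkout/cart", "/docs/getting-started"):
        url = "https://example.com" + path
        verdict = "allowed" if is_allowed(ROBOTS_TXT, url) else "blocked"
        print(f"{path}: {verdict}")
```

Nothing comparable exists for llms.txt. It has no allow or disallow rules to evaluate, only a list of links a crawler may choose to read.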

Here’s a full breakdown of the differences:

| | LLMs.txt | Robots.txt |
|---|---|---|
| Purpose | Provides a curated list of key pages that AI models may use for information and sources | Sets rules for search engine crawlers on what they may crawl |
| Target audience | LLMs like ChatGPT, Gemini, Claude, Perplexity | Traditional search engine bots (Googlebot, Bingbot, etc.) |
| Syntax | Markdown-based; human-readable | Plain text, specific directives |
| Enforcement | Proposed standard; adherence is not confirmed by major LLMs | Voluntary; considered standard practice and respected by major search engines |
| SEO/AI impact | May influence AI-generated summaries, citations, and content creation | Directly impacts search engine indexing and organic search rankings |

Layout and Elements

So, what goes inside this file — and how should you structure it?

LLMs.txt should be created as a plain-text file and formatted with markdown.

Markdown uses simple symbols to structure content.

This includes:

- # for a main heading, ## for section headings, ### for subheads

- > to call out a short note or tip

- - or * for bullet lists

- [text](https://example.com/page) for a labeled link

- Triple backticks (```) to fence off code examples when you’re showing snippets in a doc or blog post

This makes the file easy for both humans and AI tools to read.

You can see the main elements in this llms.txt example:

# Title

> Description goes here (optional)

Additional details go here (optional)

## Section

- [Link title](https://link_url): Optional details

## Optional

- [Link title](https://link_url)

Now that you know how to format the file, let’s break down each part:

- Title and optional description at the top: Add your site or company name, plus a brief description of what you do to give AI systems context

- Sections with headers: Organize content by topic, like “Services,” “Case Studies,” or “Resources,” so crawlers can quickly identify what’s in the file

- URLs with short descriptions: List key pages you want prioritized. Use clear, descriptive SEO-friendly URLs. And add a concise description after each link for context.

- Optional sections: Consider adding lower-priority resources you want AI systems to be aware of but don’t need to emphasize — like “Our Team” or “Careers”
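If you’d rather generate the file than type it by hand, the parts above map neatly onto a small script. This is a rough sketch, not an official tool: the build_llms_txt and format_link helpers, the "Example Co" name, and every example.com URL are made up for illustration.

```python
from typing import Optional

# One link entry: (link title, URL, short description). Description may be "".
Link = tuple[str, str, str]


def format_link(title: str, url: str, note: str = "") -> str:
    """Format one bullet in the '- [title](url): note' style shown above."""
    line = f"- [{title}]({url})"
    return f"{line}: {note}" if note else line


def build_llms_txt(
    title: str,
    description: str,
    sections: dict[str, list[Link]],
    optional: Optional[list[Link]] = None,
) -> str:
    """Assemble llms.txt text: title, description, sections, then Optional."""
    lines = [f"# {title}", "", f"> {description}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        lines += [format_link(*link) for link in links]
        lines.append("")
    if optional:
        lines += ["## Optional", ""]
        lines += [format_link(*link) for link in optional]
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # Hypothetical company and URLs, used only to show the output shape.
    print(build_llms_txt(
        "Example Co",
        "Example Co builds websites for small businesses.",
        {"Services": [("Web development", "https://example.com/web-development",
                       "Custom site builds")]},
        optional=[("Careers", "https://example.com/careers", "")],
    ))
```

Save the returned text as llms.txt and adjust the section names to match your own site.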

To put all the pieces together, let’s look at some examples.

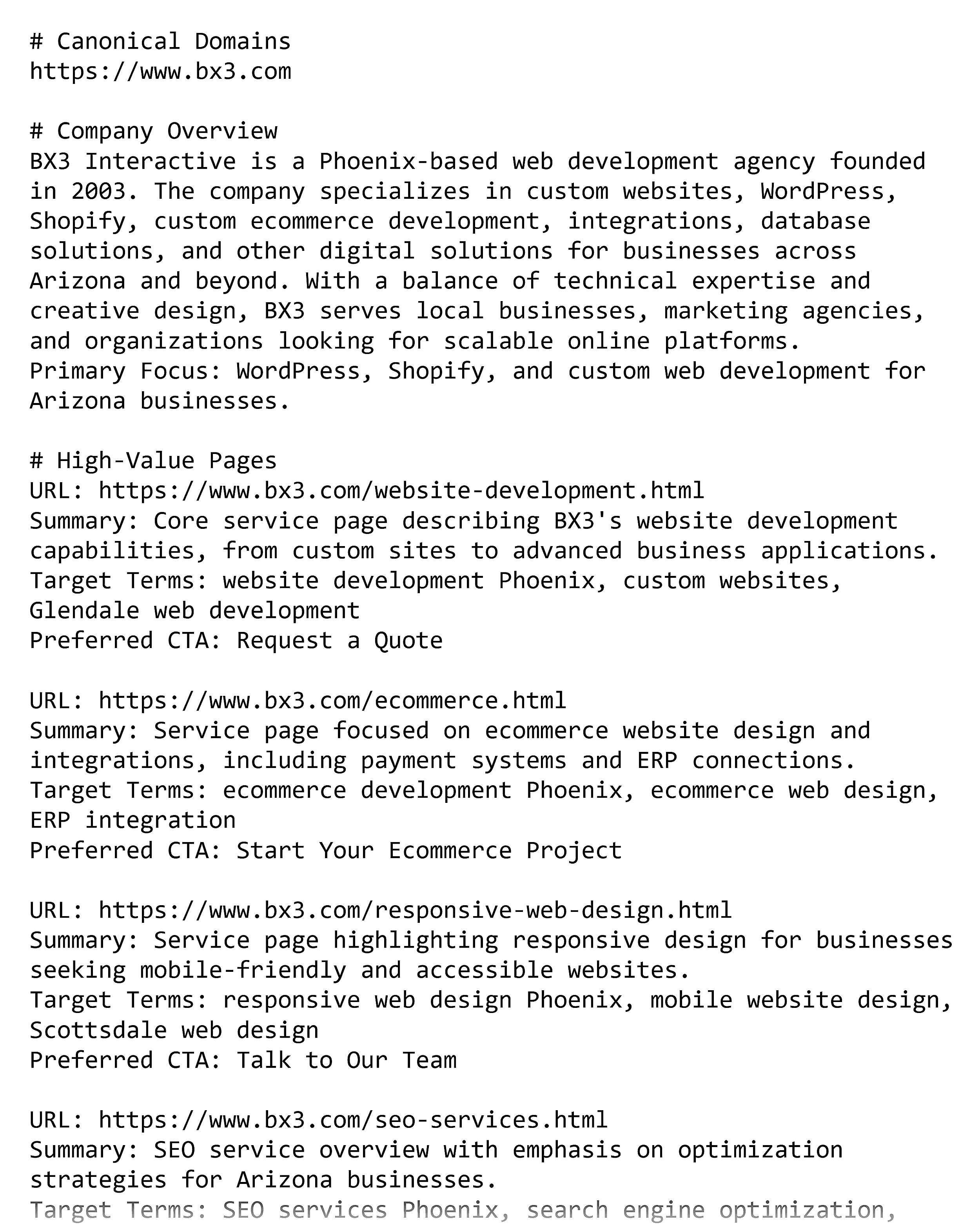

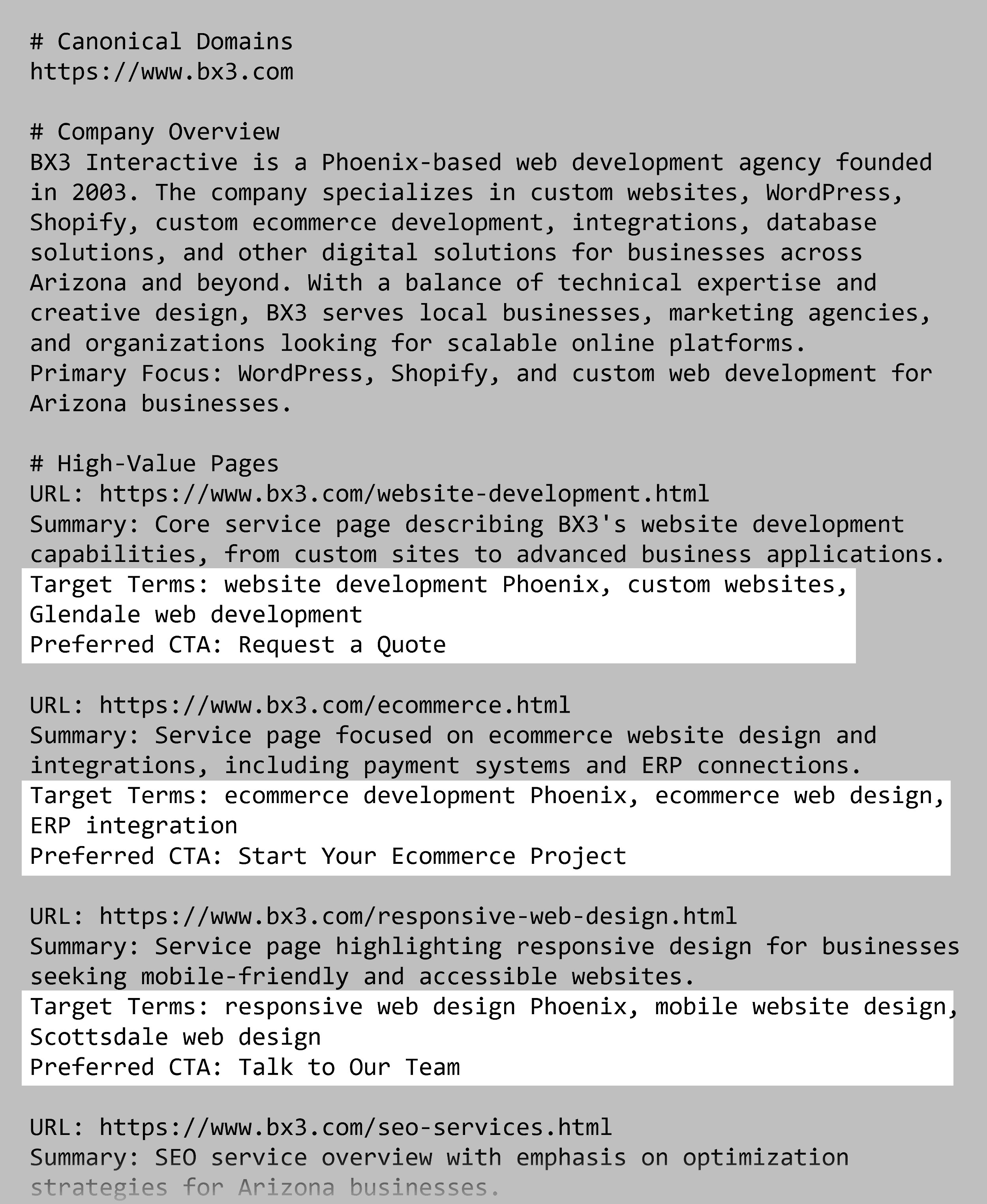

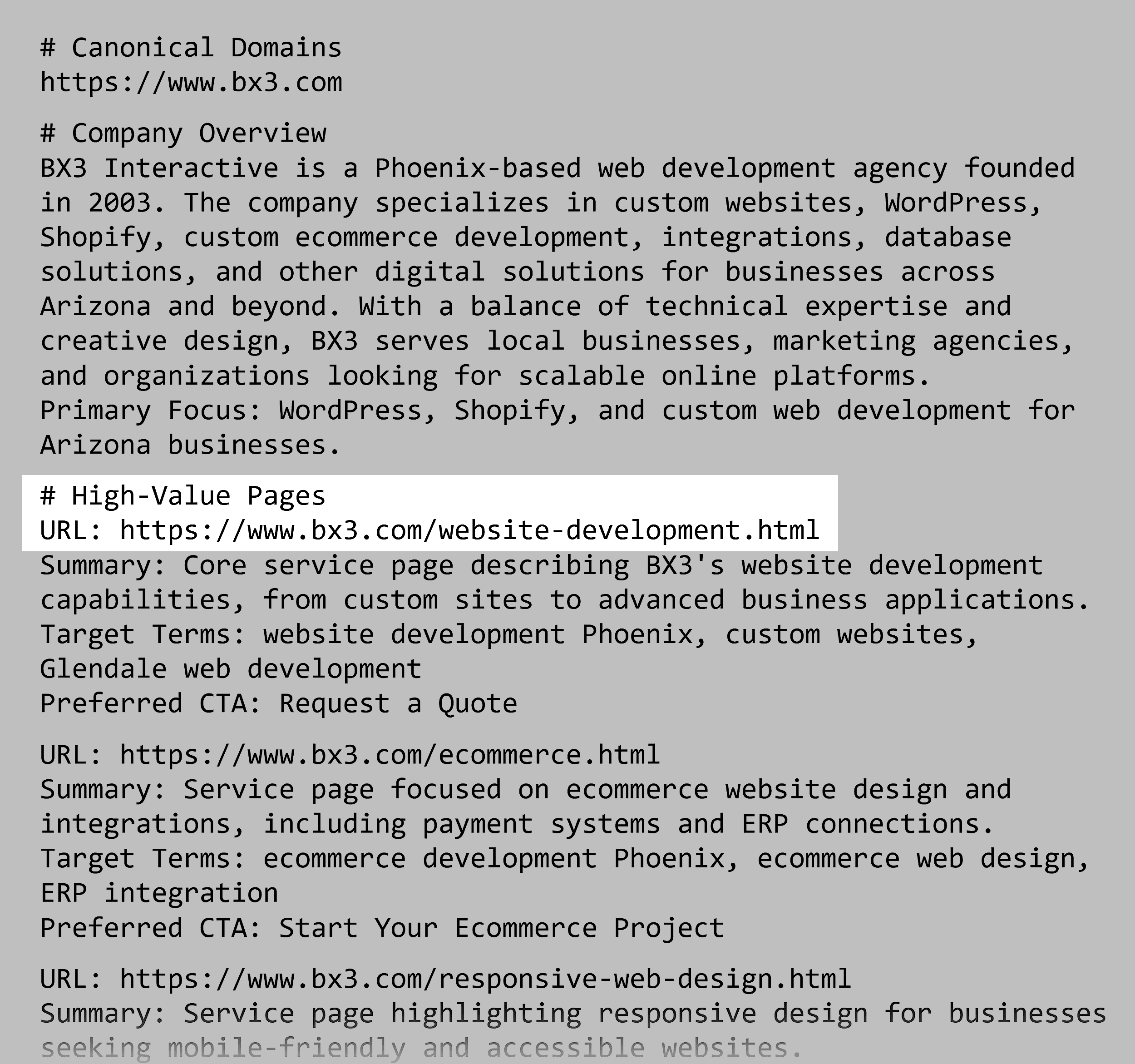

Here’s how BX3 Interactive, a website development company, structures its llms.txt file:

It features:

- The company’s name

- Brief description

- List of key service pages with URLs and one-sentence summaries

- Top projects and case studies

- Citation and linking guidelines

BX3 Interactive also includes target terms and specific CTAs for each URL.

If adopted, this approach could shape how LLMs reference the brand, guiding them toward BX3 Interactive’s preferred messaging and phrasing.

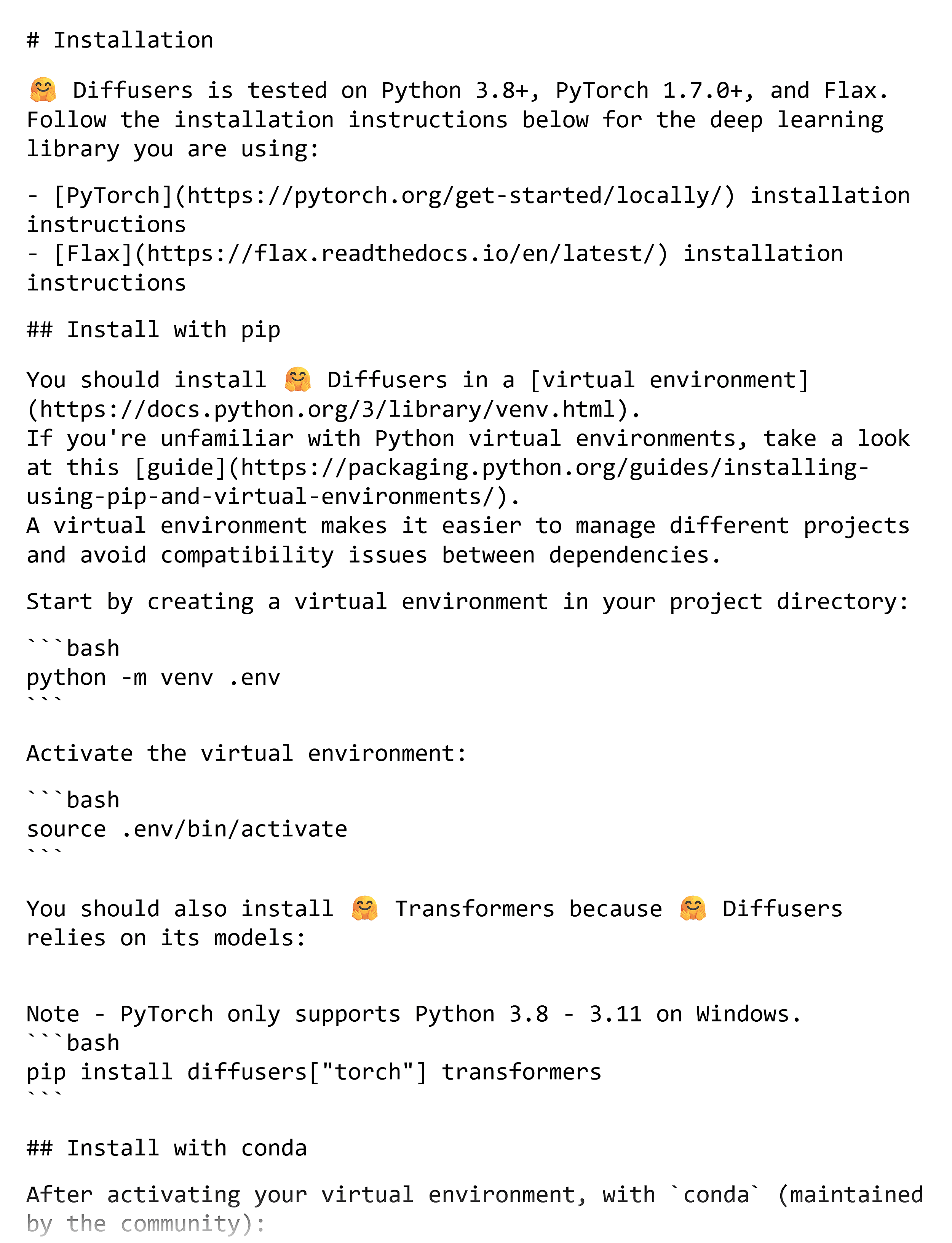

LLMs.txt files can also be more complex, depending on the site.

Like this example from the open-source platform Hugging Face:

It organizes hundreds of pages with nested headings to create a clear hierarchy.

But it goes well beyond URL lists and summaries.

It includes:

- Step-by-step installation commands

- Code examples for common tasks

- Explanatory notes and references

This way, AI systems would get direct access to Hugging Face’s most valuable documentation without needing to crawl every page.

This could reduce the risk of key details getting missed or buried.

Keep in mind that the ideal structure depends on the scope of your site and the depth of information you want AI to understand.

Is LLMs.txt Worth It?

The jury is out.

It’s possible that an llms.txt file could boost your AI SEO efforts over time.

But that would require widespread adoption.

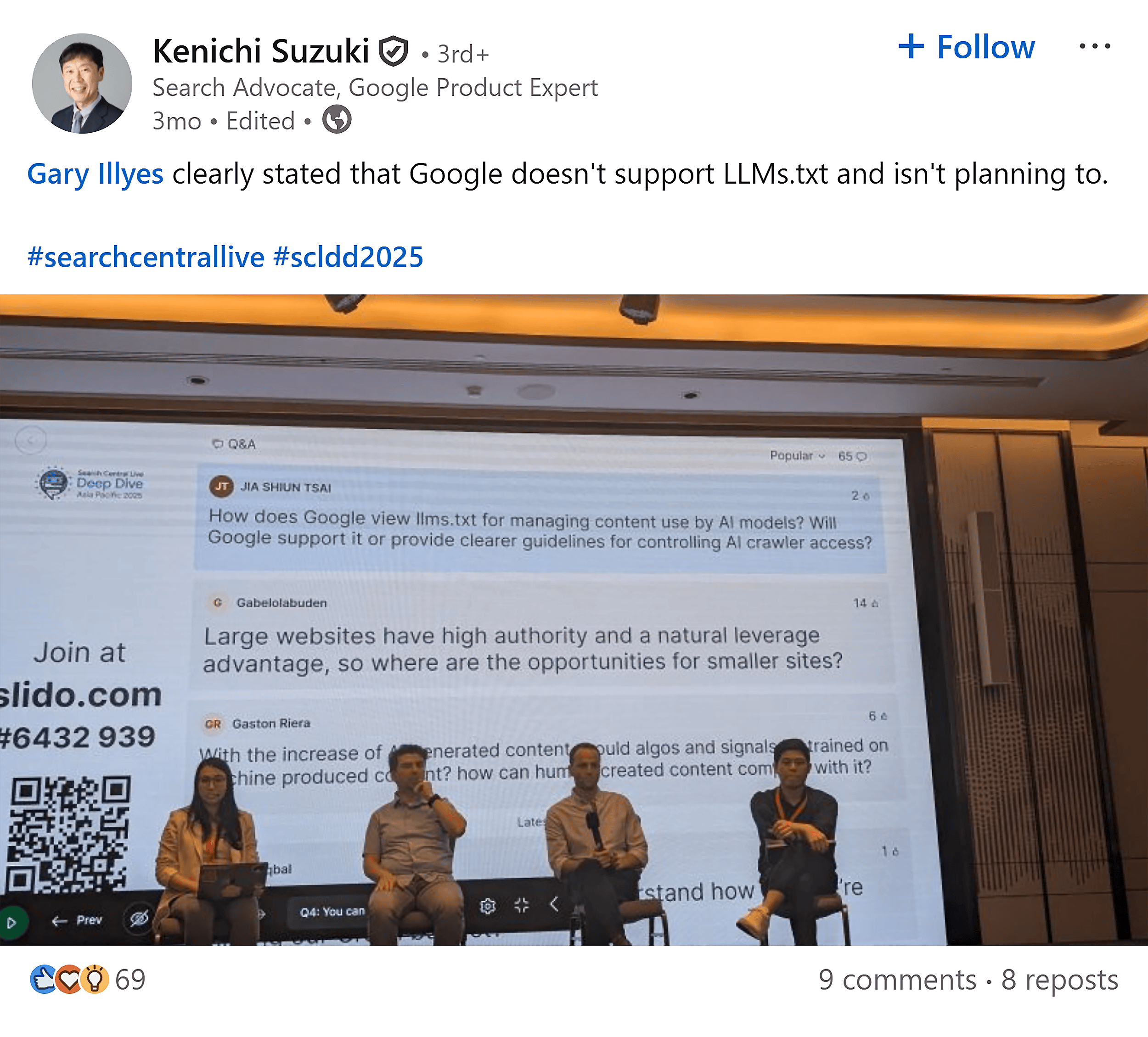

No major AI platform has officially supported the use of llms.txt yet.

And Google has been especially clear — they don’t support it and aren’t planning to.

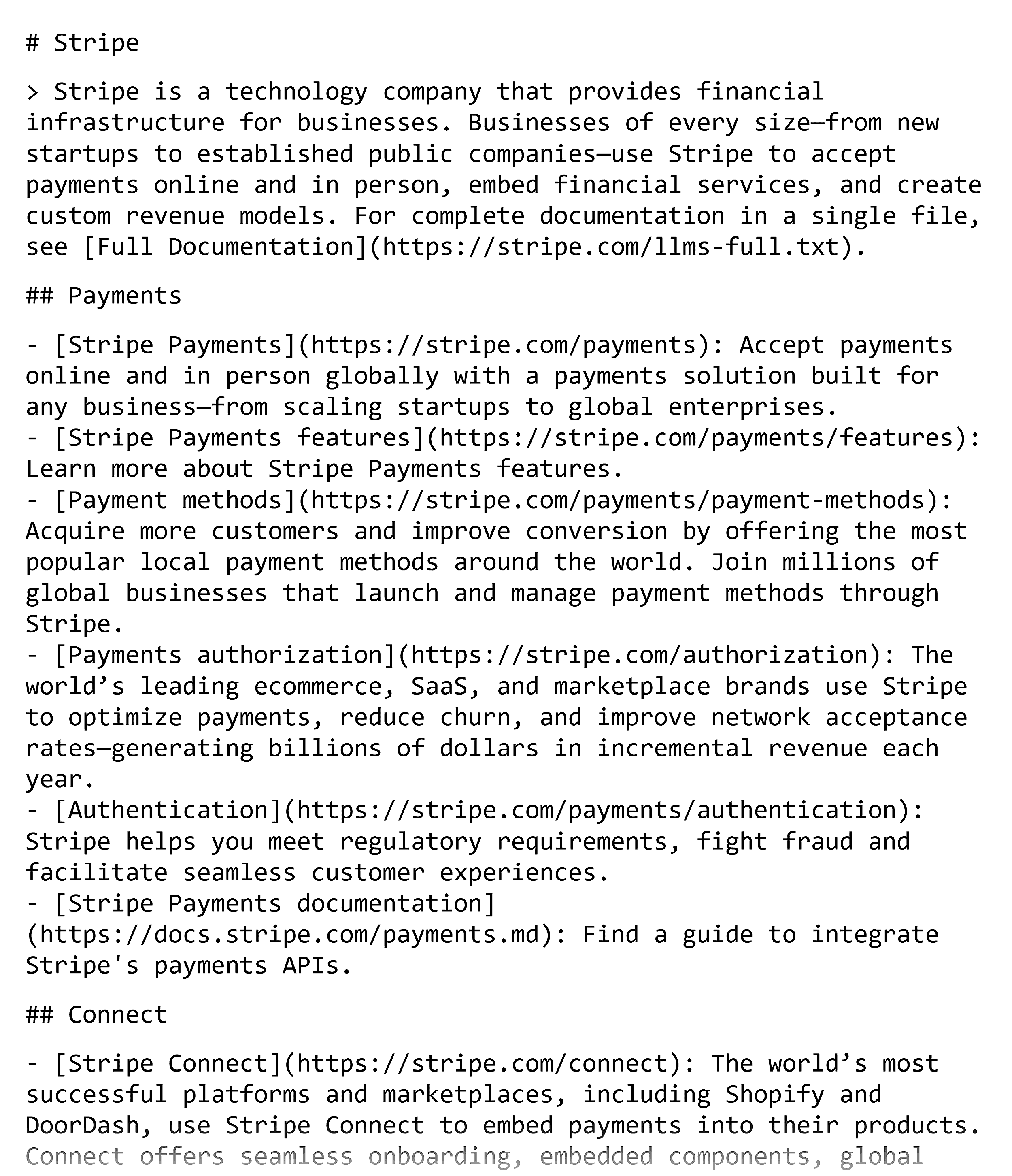

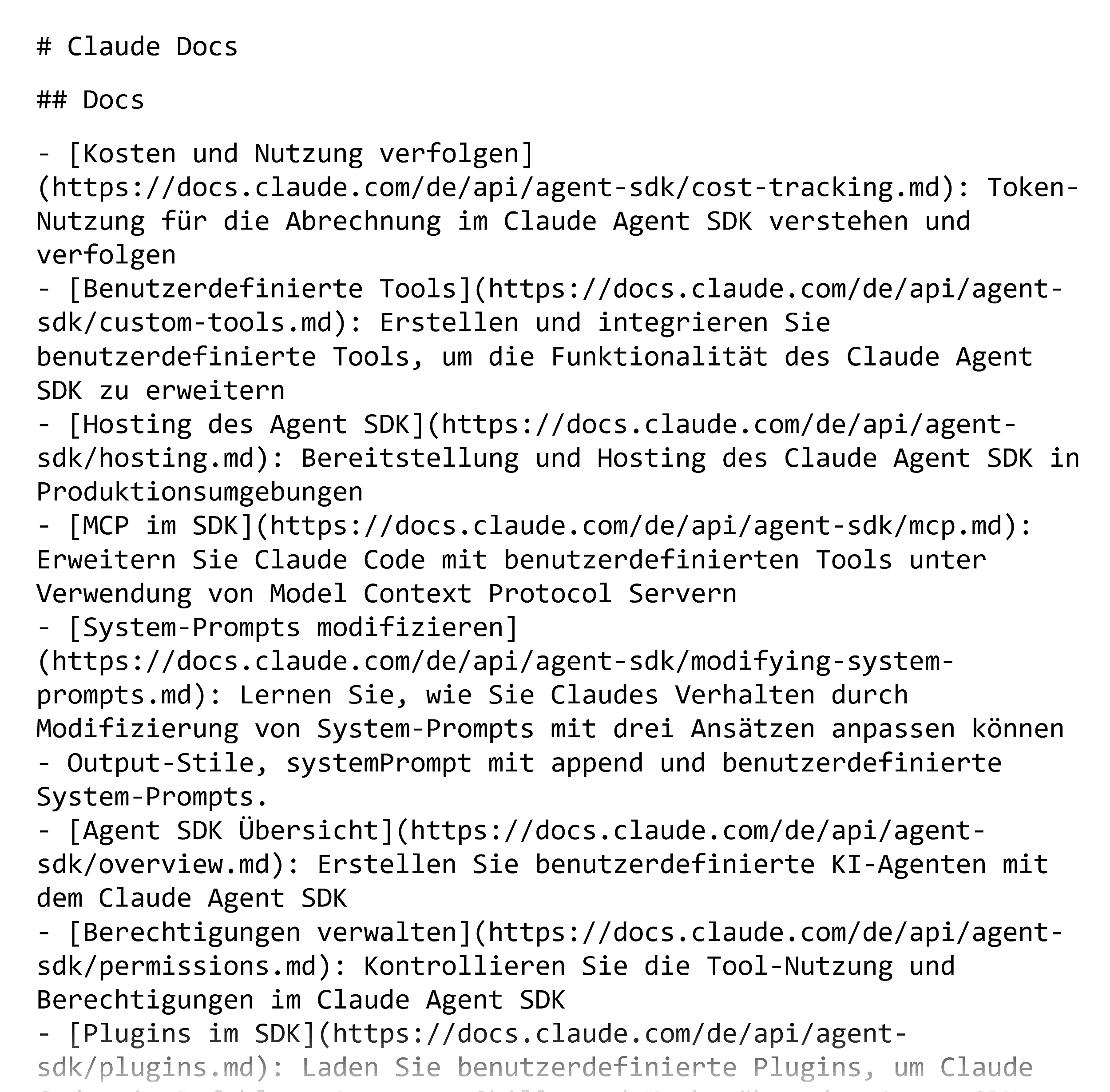

But big players like Hugging Face and Stripe already have llms.txt files on their sites.

Most notably, Anthropic, the company behind Claude, also has an llms.txt file on its website.

If one of the leading AI companies is using it themselves, it could mean they see potential for these files to play a bigger role in the future.

Bottom line?

Treat llms.txt as a low-risk experiment, not a guaranteed way to boost AI visibility.

Potential Benefits

Right now, the benefits are theoretical.

But if llms.txt catches on, you could benefit in multiple ways:

- Control what gets cited: Spotlight your blog posts, help docs, product pages, and policies so AI tools reference your best pages first instead of less important or outdated content

- Make parsing easier: Your llms.txt file gives AI models clean markdown summaries instead of forcing them to parse through cluttered pages with navigation, ads, and JavaScript

- Improve your AI performance: Guide AI models to your most valuable pages, potentially improving how often and accurately they cite your content in responses

- Analyze your site faster: A flattened version of your site (a single, simplified file listing your key pages) makes it easier to run a keyword analysis and site audit without crawling every URL

Key Limitations and Challenges

The skepticism around llms.txt is valid.

Here are the biggest concerns:

- No one’s officially using it yet: No major platforms have announced support for these files — not OpenAI, Google, Perplexity, or Anthropic

- It’s a suggestion, not a rule: LLMs don’t have to “obey” your file, and you can’t block access to any pages. Need access control? Stick with robots.txt.

- Easy to game: A separate markdown file creates an opportunity for spam. For example, site owners could overload it with keywords, content, and links that don’t align with their actual pages. Basically, keyword stuffing for the AI era.

- You’re showing competitors your hand: A detailed llms.txt file hands your competitors a lot of info they would otherwise need dedicated tools to get, including your site structure, content gaps, messaging, keywords, and more.

How to Create an LLMs.txt File in 5 Easy Steps

Creating an llms.txt file is pretty simple — even if you don’t have much technical experience.

One caveat: You may need a developer’s help to upload it.

Step 1: Pick Your High-Priority Pages

Start by selecting the pages you want AI systems to crawl first.

Think about the evergreen content that best represents what you do — your core product pages, high-value guides, FAQ sections, key policies, and pricing details.

For example, BX3 Interactive lists this web development service page first in its llms.txt file:

Why? Because it’s a core service they offer.

And by featuring it in llms.txt, they’re signaling to AI crawlers that this page is central to their business.

Step 2: Create Your File

Next, open any plain-text editor and create a new file called llms.txt.

Options include Notepad, TextEdit (on Mac), and Visual Studio Code.

Not comfortable with markdown formatting?

Ask your developer to handle it (if you have one).

Or let an LLM do the work — ChatGPT and Claude can generate these files instantly.

Here’s a prompt to get you started:

There are also llms.txt generators you can use.

For example, Yoast SEO lets you generate an llms.txt file in one click, complete with markdown.

Remember, the structure isn’t set in stone.

Include your most valuable pages, accompanied by descriptive summaries.

Then, customize the layout based on what matters most for your company.

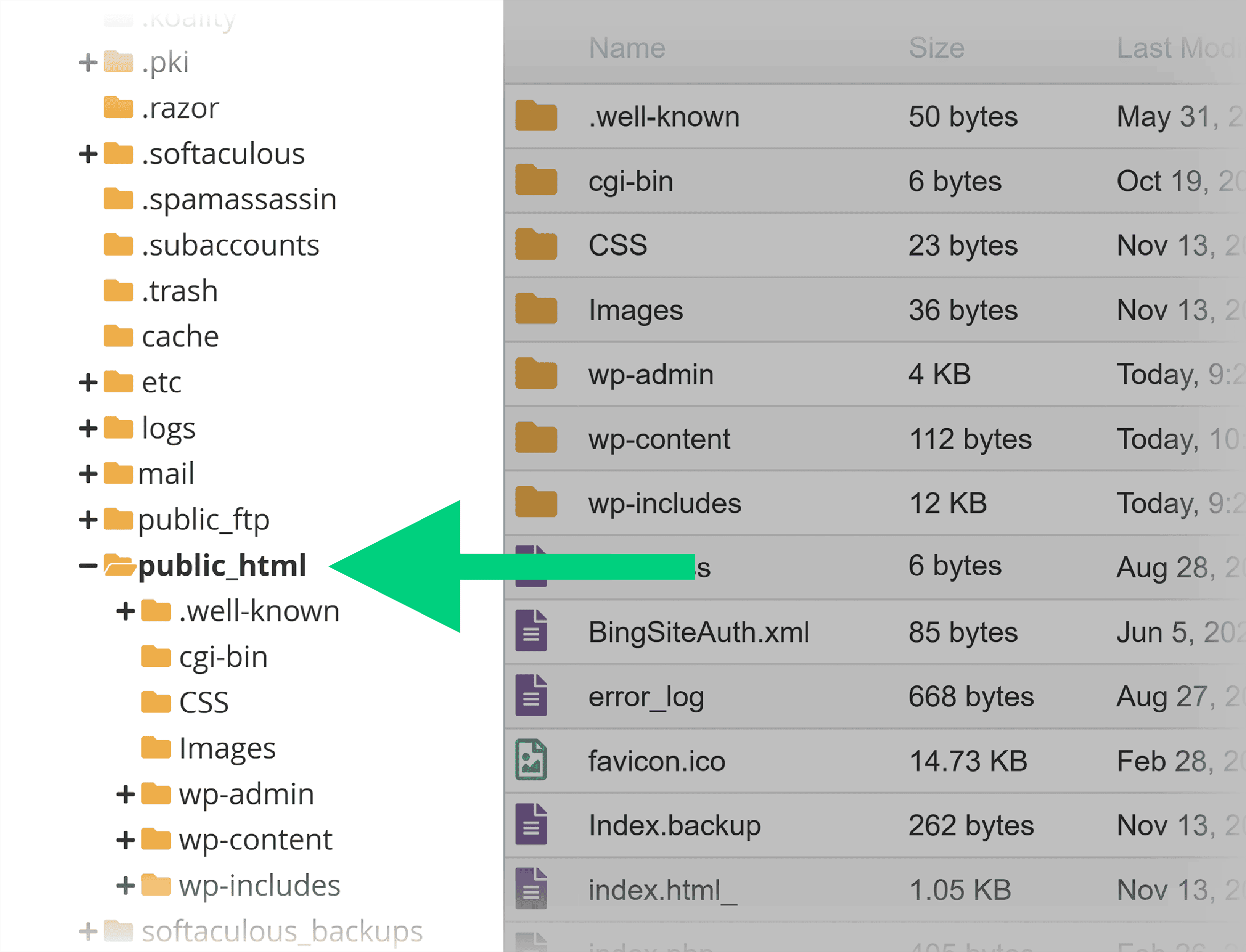

Step 3: Upload the File

Where your llms.txt file goes depends on what it covers.

- For a site-wide file, upload it to your root directory so it loads at https://[yoursite].com/llms.txt

- For documentation only, place it at the root of your docs subdomain or subdirectory: https://[docs.yourdomain.com]/llms.txt

You might need a developer’s help for this next step.

They’ll log in to your hosting panel, navigate to your public_html folder, and upload the file.

Once it’s uploaded, you’re ready to test.

Step 4: Make Sure It Works

Open a new tab and type in https://yoursite.com/llms.txt.

If you see something like this, you’re set:

Want to go a step further?

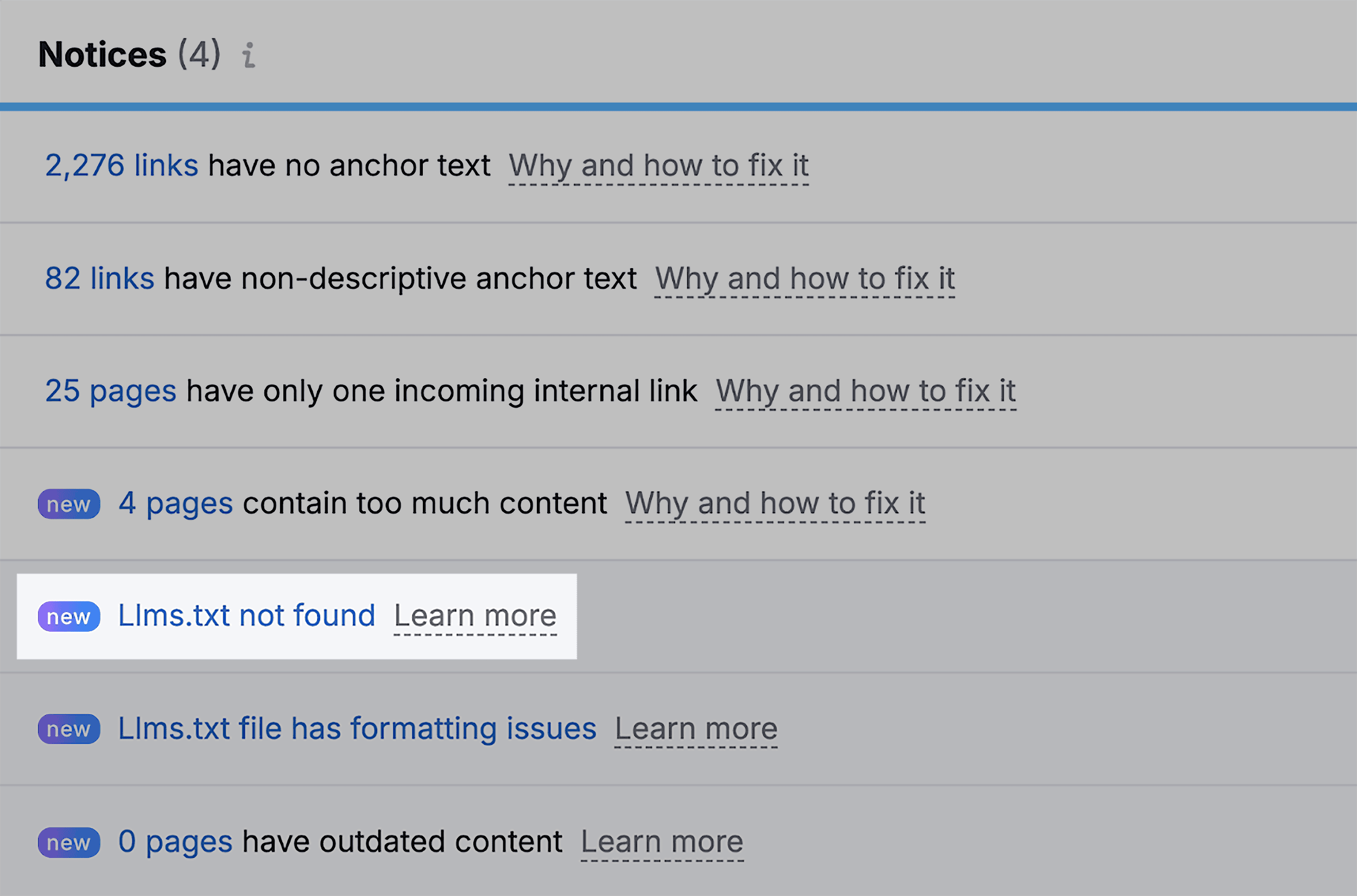

Use Semrush’s Site Audit tool to verify the file is crawlable and automatically check for any technical issues.
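You can also run a quick structural check yourself. The sketch below assumes the layout described earlier in this guide (a # title first, then - [title](url) links). The check_llms_txt function and its rules are our own illustration, not an official validator, and the sample text is made up.

```python
import re

# Matches '- [title](https://url)' with an optional ': description' suffix.
LINK_RE = re.compile(r"^[-*]\s+\[([^\]]+)\]\((https?://[^)\s]+)\)(?::\s*(.*))?$")


def check_llms_txt(text: str) -> list[str]:
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = []
    lines = [line.strip() for line in text.splitlines()]
    non_empty = [line for line in lines if line]

    # The file should open with a single '# Title' heading.
    if not non_empty or not non_empty[0].startswith("# "):
        problems.append("first non-empty line should be a '# Title' heading")

    # At least one well-formed link is needed for the file to be useful.
    if not any(LINK_RE.match(line) for line in lines):
        problems.append("no '- [title](url)' links found")

    # Flag bullets that look like links but do not parse as one.
    for number, line in enumerate(lines, start=1):
        if line.startswith(("- [", "* [")) and not LINK_RE.match(line):
            problems.append(f"line {number}: malformed link entry")
    return problems


if __name__ == "__main__":
    # To check a live file, download it first and pass in the decoded text.
    sample = "# Example Co\n\n> Short description\n\n## Docs\n\n- [Guide](https://example.com/guide): Setup steps\n"
    print(check_llms_txt(sample) or "Looks fine")
```

An empty result only means the file follows the basic layout. It says nothing about whether any AI system will read it.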

Step 5: Keep It Fresh

Your llms.txt isn’t a set-it-and-forget-it file.

Schedule a review every few months:

- Remove outdated pages that no longer represent your best work

- Add new content worth spotlighting as it’s published

This ensures AI systems always see your most relevant content.
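Part of that review can be automated. Here’s a minimal sketch that pulls every URL out of your file and flags the ones that no longer return HTTP 200. The function names are our own, and the check only catches broken links, so you’ll still need to judge which pages are outdated. Some servers also reject HEAD requests, so treat a flagged URL as something to inspect, not proof the page is gone.

```python
import re
from typing import Callable, Optional
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# Captures the URL inside a markdown link: [title](https://...)
URL_RE = re.compile(r"\]\((https?://[^)\s]+)\)")


def extract_urls(llms_txt: str) -> list[str]:
    """Return every linked URL in file order, with duplicates removed."""
    return list(dict.fromkeys(URL_RE.findall(llms_txt)))


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Send a HEAD request; return the status code, or None if unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code  # The server answered, but with an error status.
    except OSError:
        return None  # DNS failure, timeout, refused connection, and so on.


def find_stale_links(
    llms_txt: str,
    get_status: Callable[[str], Optional[int]] = http_status,
) -> list[tuple[str, Optional[int]]]:
    """Return (url, status) pairs for links that did not answer with HTTP 200."""
    stale = []
    for url in extract_urls(llms_txt):
        status = get_status(url)
        if status != 200:
            stale.append((url, status))
    return stale

# Usage (makes real network requests):
#   with open("llms.txt", encoding="utf-8") as handle:
#       for url, status in find_stale_links(handle.read()):
#           print(status, url)
```

Passing your own get_status function lets you test the logic without touching the network.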

Should You Use an LLMs.txt File on Your Site?

As SEOs like to say, “it depends.”

If setup is quick and you’re curious to experiment, it’s worth doing.

Worst case, nothing changes.

Best case, you’re ahead of the curve if AI platforms start paying attention.

In the meantime, don’t neglect proven SEO fundamentals.

Structured data, high-authority backlinks, and helpful content are what help AI — and traditional search engines — understand, trust, and surface your pages.

Want to boost your AI visibility now?

Check out our AI search guide for a framework that’s already working.